Child-Robot Interaction and Voice

Supervised by Prof. dr. V. Evers, dr. R.J.F. Ordelman & dr. K.P. Truong

I joined the CHATTERS (Children and AI Talking Trust and Responsible Spoken Search) project in October 2019, and it is the main project of my PhD at the HMI department of the University of Twente, NL. To read more about the project in general, see the HMI project page. Thomas Beelen also works on this project as a PhD student, and we often work together while each pursuing our own goals.

Trust in Voice

Children bring their own challenges, but they are also a very interesting group to research. For example, children talk differently from adults: they have a smaller vocabulary and make more mistakes. A robot needs to be able to understand them nonetheless. Furthermore, measuring things like likability, trust and human-likeness is more challenging, since children are natural people-pleasers and standard, validated questionnaires are often too hard for them to understand.

This is why my general research question during my PhD is:

How can we evoke a critical attitude in children towards the information that the robot provides?

For a short introduction to my research, you can watch this video:

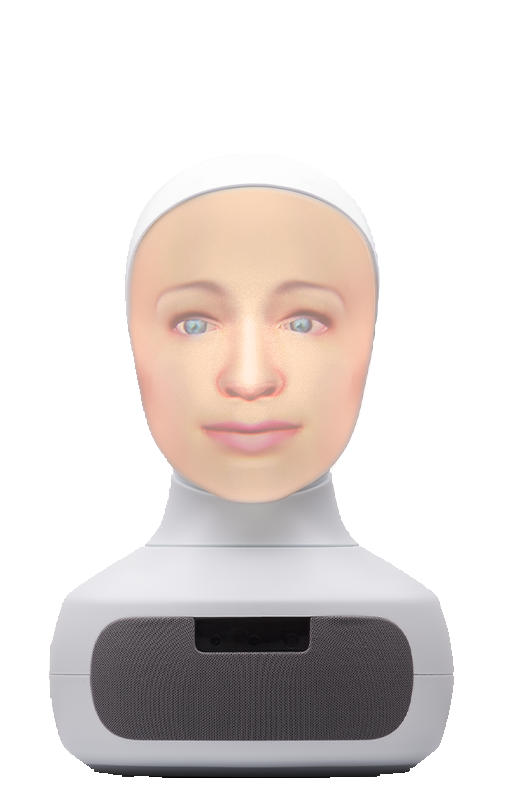

I will start by looking into the vocal and interaction features of the conversation, to see whether there are, for example, typical differences in pitch when children do or do not trust the robot. Other things I might look at are facial expressions, gaze, and the distance between children and the robot.

If I succeed in identifying these behavioral measures of trust, it will be of great benefit both to the research field of child-robot interaction (and perhaps the broader field of child-agent interaction) and to robot manufacturers. Researchers will have a more objective way of assessing trust, with no need to build an explicit assessment, such as a trust game, into the experiment. Industry, on the other hand, will be able to use these measures to identify trust and perhaps adjust the robot's behavior to create a more personalized experience for the children.

More information on this project and team will soon be available on our project website!